Continuation of Product Foundations Part 1: Ideation

The job of a Product Manager is to determine a winning strategy for achieving a company goal. They’re also responsible for ensuring that the team is working on the most valuable stuff. Strategy might make up 10-15% of the role, and execution is the rest. Prioritization is essential to ensuring that the team is doing the highest-value work it can.

Yet oftentimes people don’t put in the rigor needed at this crucial step in the process. The job of a PM is sometimes compared to being the “CEO” of that part of the product, and one of the most important jobs of a CEO is to allocate resources to the highest-priority work. Think back to past companies where you felt that the projects getting resourced weren’t high-value, or where you didn’t understand how those decisions were made. That didn’t sit well with you, did it? Well, as a PM, not having a strong prioritization process doesn’t fly either. Read on if you think prioritization is important.

Preface

A few things you’ll notice after reading this article: in my opinion, prioritization is less about a person, a process, or expertise, and more about mitigating risk. It’s less about critical thinking and evaluation and more about letting the users do the prioritization for you. It’s less about creating a vision and executing against it, and more about letting the ideal product for your audience reveal itself over time by placing many, many smart bets in different investment areas. They say that entrepreneurs are some of the best risk-takers, but in reality they are some of the best risk-mitigators. PMs are, after all, the CEOs of their part of the product, and for a startup CEO the toughest first hire is the first product person. Why? Because the level of responsibility and impact that comes with the role also brings with it the potential for risk.

The different sophistication levels of prioritization

As I said in the preface, more sophisticated prioritization methods are better at mitigating risk. In fact, the most sophisticated approaches start to look similar to the portfolio and hedging strategies used by investment funds. After all, investment funds are some of the smartest and most sophisticated risk-mitigators in the world. They try to optimize every investment for the maximum return.

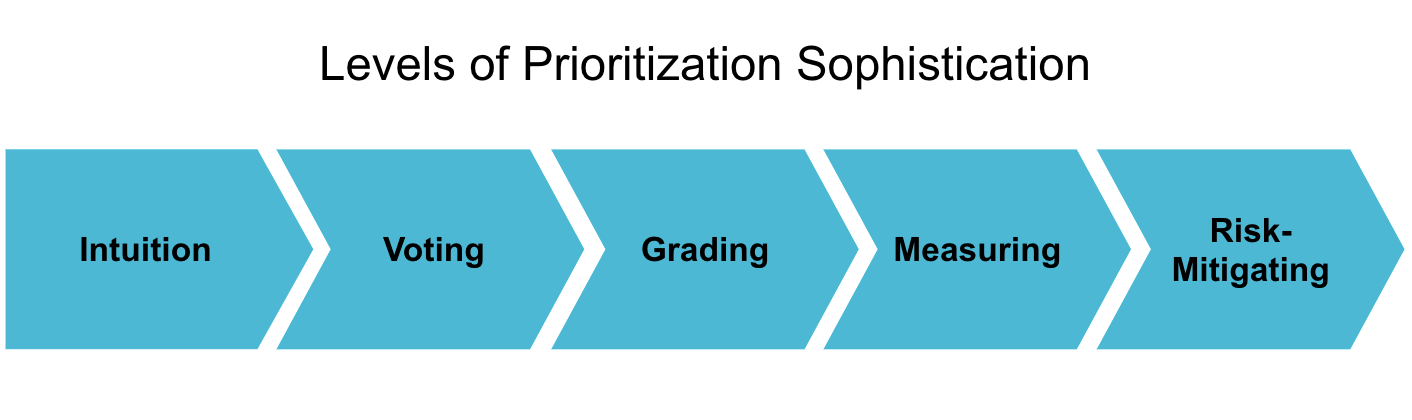

Here are the key levels I think about and have seen. The list isn’t exhaustive, but it gives you a good way to think about the problem.

Level 1: Intuition – This is the “I believe this will work” method. It’s not hard to see why this approach is risky: people are often wrong! But there are some contexts, which I’ll get into below, where intuition does make sense.

Level 2: Voting – Think of it as group intuition with some measurement thrown in. My vote, though, is that voting is more reliable than intuition.

Level 3: Grading – Like voting, but you’ve spruced up the votes with more detail than a simple hash mark. What about the idea earns it a hash mark? Find out by scoring it against grading factors.

Level 4: Measuring – With grading, you’re inputting what YOU think. But how do you know for sure? With measuring, the users tell you, and you get as close to knowing FOR SURE as you can.

Level 5: Risk-Mitigating – Okay, so now you have a measured prioritization. Hedging, then, is the practice of overlaying categories or swimlanes on top of it. This allows you to respond to change and optimize your investment strategy over both short- and long-term time horizons.

Intuition – A betting man’s sport

Like to bet? Well, come on down, you’re the big winner!

I will say that while intuition isn’t the highest-confidence approach to prioritization, there are scenarios where it is the right strategy. At a base level, PMs need to be applying some critical thinking and evaluation to the roadmap.

When to use intuition

If you’ve been at a company for a while or are relatively senior in the problem space, you can draw on intuition and achieve more positive and reliable outcomes. The other case is early-stage companies or products, when you’re moving fast and don’t have enough traffic to run formal A/B tests. Leaning on intuition can help you move quickly: with less confidence, but quickly nonetheless. In general, if you need to move fast, you may sometimes sacrifice confidence for speed. But really, getting that extra confidence through voting, grading, or looking at comparables doesn’t take that much time in the first place.

In most situations, however, intuition is not an effective way to prioritize a backlog, and what people end up finding is that, well, their intuition is often wrong.

A humble man’s thoughts on intuition

In high-velocity A/B experimentation, on average 25% of tests are winners, 25% are losers, and 50% are neutral or inconclusive.

Now, don’t get me wrong, I think every single one of our A/B experiments is the bee’s knees, God’s gift to the product, the most epic product improvement I’ve ever seen! But after enough of your idea-babies have been taken out to pasture and laid to rest, and your chosen feature improvement turns out to hurt your metric instead of helping it, you learn not to trust your intuition all that much. You start to understand that, in many cases, you really just don’t know.

In fact, I’d wager that in ~75% of those cases I’ll be wrong, since only about a quarter of tests turn out to be winners.

Voting – 5 hash marks on epic idea #1 there, Bill

Make sure to draw the 5th one diagonally across the first four rather than as a 5th straight hash mark like a PSYCHO.

Voting has an immediate advantage over intuition: ideas are more likely to be evaluated and prioritized correctly. Why? Because you are relying on multiple perspectives rather than one.

With intuition, there is a single point of failure; when multiple people give input, it helps counteract bias and leverages the differing expertise in the group. Many times the voting results will align with what a PM would have prioritized in the first place, which is great. However, on average you’ll find that around 30% of ideas shift: either they wouldn’t have been at the top of the list without the voting, or they were ranked too high in the PM’s mind and, after voting, fall out of the top quartile.

For voting to be successful, aim to limit the number of votes each person gets (say 5 or 10). Try to make voting anonymous, and randomize the order of the options if you can, so that items at the top don’t get the most votes just because people read from the top down. A high number of votes overall helps mitigate the bias that creeps in when some people use all of their votes and others don’t.
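If you run voting with a simple script instead of sticky notes, the mechanics above (a vote cap, anonymous ballots, shuffled option order) are easy to sketch. The Python below is a minimal illustration, assuming each ballot is just a list of idea names with no voter identity attached; the function names, the idea names, and the cap of 5 are hypothetical.

```python
import random
from collections import Counter


def shuffled_ballot(ideas, seed=None):
    """Return the ideas in random order so no idea benefits from being listed first."""
    rng = random.Random(seed)
    ballot = list(ideas)
    rng.shuffle(ballot)
    return ballot


def tally_votes(ballots, ideas, max_votes=5):
    """Count anonymous votes and return (idea, votes) pairs, most votes first.

    Each ballot is a list of idea names; no voter identity is stored.
    Ballots that exceed the vote cap or name an unknown idea are rejected.
    """
    counts = Counter({idea: 0 for idea in ideas})
    for ballot in ballots:
        if len(ballot) > max_votes:
            raise ValueError(f"ballot has {len(ballot)} votes; the limit is {max_votes}")
        for idea in ballot:
            if idea not in counts:
                raise ValueError(f"unknown idea: {idea!r}")
            counts[idea] += 1
    return counts.most_common()


# Hypothetical backlog and three anonymous ballots.
ideas = ["Saved carts", "One-click checkout", "Price alerts", "Gift wrapping"]
print(shuffled_ballot(ideas))  # random order; generate one per voter
ballots = [
    ["One-click checkout", "Saved carts"],
    ["One-click checkout", "Price alerts"],
    ["Saved carts", "One-click checkout", "Gift wrapping"],
]
print(tally_votes(ballots, ideas))
# [('One-click checkout', 3), ('Saved carts', 2), ('Price alerts', 1), ('Gift wrapping', 1)]
```

Shuffling each voter’s list independently spreads the “first on the list” advantage across all ideas instead of handing it to one.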

Grading

Grading is a great way to quantitatively sort a backlog according to how you perceive the costs and benefits of the ideas. The point of grading isn’t to be 100% accurate; it’s to ensure that there is a relative ranking across the factors that are important to you. This helps control for bias, and you end up with a backlog you can be more confident in. In an ideal world, multiple people grade the backlog, which adds the benefit of checking grades against multiple perspectives.

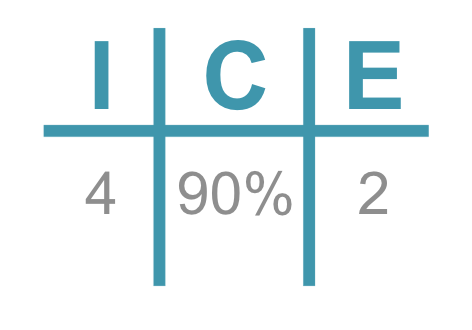

Applying a grading technique becomes more and more important as your backlog grows, since a large backlog is difficult to organize and it gets harder to decide between two ideas that sound equally good. The most basic grading method I see is impact divided by effort. Of course, this gives you a backlog optimized for the highest impact per unit of effort. I’ve talked before about the ICE method (Impact, Confidence, Ease), which is a great, simple approach to grading.

Having Confidence as a grading category is imperative. As I mentioned in the intuition section, a lot of bias comes along with your own perspective. Confidence measures how much supporting data, qualitative and/or quantitative, you have that makes it more likely the idea will succeed. It’s okay not to have any data, and for many ideas you won’t. But you should recognize when an idea is something you have seen in comparables, have heard from users, or has proven successful in a different application. My post about ideation also covers different idea sources, which can be thought of as carrying different levels of confidence.
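To see why the Confidence factor matters, here is a minimal Python sketch comparing a grade that ignores confidence with an ICE score. It assumes each factor is scored 1-10, treats Ease as the inverse of effort (so impact × ease stands in for impact divided by effort), and multiplies the three ICE factors together; many teams average them instead. The `Idea` class and the backlog items are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int      # 1-10: how much the idea could move the goal metric
    confidence: int  # 1-10: supporting evidence (data, user feedback, comparables)
    ease: int        # 1-10: inverse of effort, where 10 means very cheap to build

    def __post_init__(self):
        for value in (self.impact, self.confidence, self.ease):
            if not 1 <= value <= 10:
                raise ValueError(f"{self.name}: every factor must be between 1 and 10")

    def impact_x_ease(self) -> int:
        """Stand-in for impact divided by effort; ignores confidence entirely."""
        return self.impact * self.ease

    def ice_score(self) -> int:
        """Multiplicative ICE score (an assumed variant; averaging is also common)."""
        return self.impact * self.confidence * self.ease


def rank(backlog, score):
    """Return idea names sorted by the given scoring method, highest first."""
    return [idea.name for idea in sorted(backlog, key=score, reverse=True)]


# Hypothetical backlog: one big but unproven idea and two better-supported ones.
backlog = [
    Idea("One-click checkout", impact=9, confidence=2, ease=6),
    Idea("Saved carts", impact=6, confidence=7, ease=5),
    Idea("Price alerts", impact=5, confidence=8, ease=7),
]
print(rank(backlog, Idea.impact_x_ease))  # ['One-click checkout', 'Price alerts', 'Saved carts']
print(rank(backlog, Idea.ice_score))      # ['Price alerts', 'Saved carts', 'One-click checkout']
```

Without confidence, the unproven idea tops the list; once confidence is included, it drops to the bottom, which is exactly the bias check described above.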

Measuring

Grading is all great and fun, but at the end of the day, if you as a human are inputting the grades, how accurate can they be? Wouldn’t it be great if you knew what % of users the idea would be relevant to, and how valuable it would be for them?

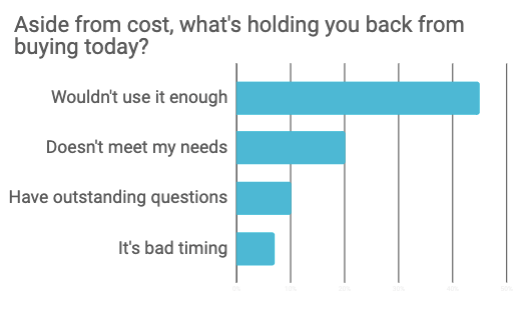

Well, you can, through polling, measuring, or other signals. Sometimes we measure interest in a feature with a “hacked” test: it isn’t a real feature, but it gives us a signal on whether users want it. Another way to measure is to ask users a question with multiple-choice answers. You can ask them which of these features would be relevant to them and, if solved, how valuable each would be. Some people also plot the results as a scatter plot of relevance against value to show which features are both the most relevant and the most valuable.

We use our measurements and feed them right into our ICE scoring.
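As one possible way to feed poll results into ICE, the sketch below turns answers to the two poll questions (is this relevant to you, and if solved, how valuable would it be?) into a relevance share and an average value per feature, then maps their product onto the 1-10 impact scale. The 1-5 value scale, the mapping formula, the function names, and the poll data are all assumptions for illustration, not a standard.

```python
def summarize_poll(responses, features):
    """Summarize a relevance/value poll.

    responses: one dict per respondent, mapping each feature they marked as
               relevant to the value they gave it (assumed 1-5 scale).
    Returns {feature: (share of respondents who found it relevant,
                       mean value among those respondents)}.
    """
    total = len(responses)
    summary = {}
    for feature in features:
        ratings = [answers[feature] for answers in responses if feature in answers]
        relevance = len(ratings) / total if total else 0.0
        mean_value = sum(ratings) / len(ratings) if ratings else 0.0
        summary[feature] = (relevance, mean_value)
    return summary


def impact_from_poll(relevance, mean_value, max_value=5):
    """Map relevance x normalized value (0.0-1.0) onto a 1-10 impact score (assumed mapping)."""
    raw = relevance * (mean_value / max_value)
    return max(1, round(raw * 10))


# Hypothetical poll of four users.
responses = [
    {"Saved carts": 4, "Price alerts": 2},
    {"Saved carts": 5},
    {"Price alerts": 3},
    {"Saved carts": 3, "Price alerts": 1},
]
features = ["Saved carts", "Price alerts", "Gift wrapping"]
for feature, (relevance, value) in summarize_poll(responses, features).items():
    print(f"{feature}: {relevance:.0%} relevant, avg value {value:.1f}, "
          f"impact {impact_from_poll(relevance, value)}")
```

The two numbers per feature are also the x and y coordinates you would use for the relevance-versus-value scatter plot.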

Risk Mitigating

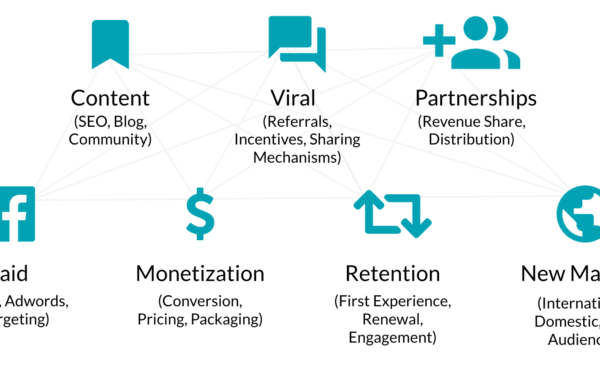

Something could score high, but what is its main objective, and how does it fit with the rest of the backlog? Or maybe it scores low, but it should be prioritized to support the longer-term strategy.

This is where risk mitigation comes in, or rather the concept of “making smart investments”. Hedge funds are a prime example of risk-mitigators. They invest in some areas and balance those investments with complementary investments in other areas. This allows them to adjust quickly and maximize returns as the market changes, and to minimize their risk if the market moves in a way that would jeopardize their portfolio (say, if it were all in cruise lines and then a pandemic hit).

Portfolio

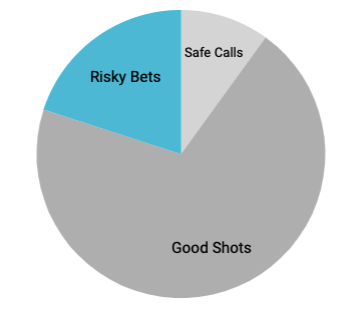

The main takeaway here is the idea of a backlog “portfolio”. If you put all ideas in a single backlog and rank them against each other, you end up with a strong backlog. However, there are in fact different strategies you can play with that backlog depending on your objectives and tolerance for risk. For instance, some items on the backlog might be high impact, high effort, and low confidence, and therefore may not score at the top with ICE scoring, but could still be something you should do. That would typically be a “risky bet”. I think of our portfolio in terms of “safe bets”, “good shots”, and “risky bets”, and you may want your portfolio to have a distribution of X%, Y%, and Z% across them.

Depending on what stage your company is in, maybe you make riskier bets because you need a Hail Mary (hopefully not). Maybe you are somewhere in the middle. Or maybe you have a lot of cash or resources and decide you want to invest in some riskier bets that could push the envelope on innovation. In general, though, I think it’s best in product management to take a balanced approach: don’t do too much that is safe or too much that is risky, make sure you have a little of each, and keep most of the portfolio in the “good shots” category. I generally shoot for 10% safe bets, 70% good shots, and 20% risky bets, sometimes going up on the risky side if we need to bust through a local maximum and give ourselves more ground to work with.
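Turning a target mix like 10/70/20 into a concrete number of bets involves a small rounding problem, because the percentages rarely divide the total evenly. Below is a minimal Python sketch, assuming you already know how many bets the team can take in a quarter. It uses largest-remainder rounding (a swapped-in technique, not something the mix itself prescribes) so the counts always add up to the total; the function name and category keys are hypothetical.

```python
import math

DEFAULT_MIX = {"safe bets": 0.10, "good shots": 0.70, "risky bets": 0.20}


def allocate_bets(total_bets, mix=DEFAULT_MIX):
    """Split a quarter's bet capacity across portfolio categories.

    Uses largest-remainder rounding so the counts always sum to total_bets.
    """
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("mix percentages must sum to 1")
    exact = {category: total_bets * share for category, share in mix.items()}
    counts = {category: math.floor(value) for category, value in exact.items()}
    leftover = total_bets - sum(counts.values())
    # Hand the remaining bets to the categories with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda c: exact[c] - counts[c], reverse=True)
    for category in by_remainder[:leftover]:
        counts[category] += 1
    return counts


print(allocate_bets(7))
# {'safe bets': 1, 'good shots': 5, 'risky bets': 1}

# Shifting toward risky bets to get past a local maximum.
print(allocate_bets(20, {"safe bets": 0.10, "good shots": 0.60, "risky bets": 0.30}))
# {'safe bets': 2, 'good shots': 12, 'risky bets': 6}
```

Naive rounding of 7 × 10/70/20 would give 1, 5, and 1 here only by luck; with other totals it can over- or under-allocate, which is why the leftover is handed out explicitly.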

Swimlanes

Another aspect to consider is swimlanes. We separate out our risky bets and actually de-risk them. We do this using “pilot tests”: a hacked test of a product improvement that is either unproven or may lead us down a path requiring significantly more investment as we move forward. By running a pilot test on the strategy, we can get a signal on whether it’s worth pursuing further.

Another swimlane we have is for “opportunistic and team-generated” ideas. This is because ideas that come up often come up for a good reason, and in some cases it makes sense to capitalize on a new development. We don’t want to have to grade these or hold them off until next quarter’s planning. Sometimes you need to ensure there is flexibility to move on an idea opportunistically and just see what happens. I would note that this assumes the ideas are relatively small, or at least that you can build a small version to test.

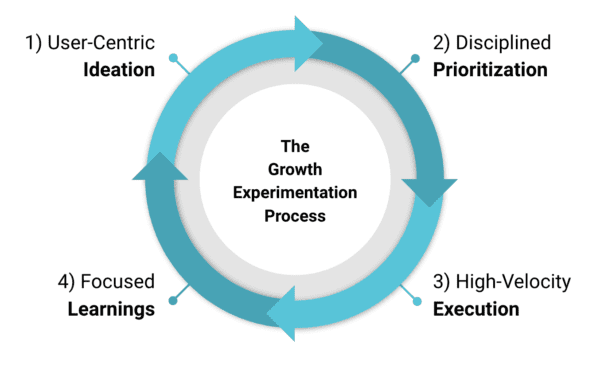

In our planning, we use measured user problems in an ICE framework at the “themes” level to identify which problems we want to solve. Then, every quarter, we allocate a number of tests to each theme according to our betting strategy.

FAQs

What if the CEO or an executive wants something prioritized?

Ooh, this is a tough one. It does happen from time to time, and you should expect it to happen more. Sometimes, if an executive wants something, you should just go ahead and do it. If it starts to happen frequently and becomes a habit, then you have a problem to address, and you want to make sure you didn’t set the wrong expectations. If the request is going to take a lot of effort, resourcing needs to be discussed. In many cases, though, it’s fine if you don’t do it right away. The key is to ensure that they feel heard and that you communicate in detail what will happen and the timeline, so that they have the right expectations. Then follow up with them from time to time so that they aren’t wondering where it is in the backlog.

How do you communicate the prioritization approach to the rest of the company?

I think the best approach is to do a lunch-and-learn or present at an all-hands. It’s also good to do a quarterly “roadshow” of your plan and roadmap with relevant departments. You can include a slide on how you prioritized and landed on the roadmap before you share the roadmap itself.

How often do you do prioritization?

We really only do it once a quarter, or rather, we revisit our prior prioritization once a quarter. We do shift some things around during the quarter in terms of where we’re investing our individual bets, but for the most part we stick within the themes we identified.

Is prioritization different in different company sizes?

It can be, since you might want a riskier or less risky strategy depending on where you are. At a smaller or earlier-stage company there is also less knowledge and data about users, and perspectives are less developed, so naturally you are operating with less confidence.

How do you deal with conflicts in prioritization between different goals?

Swimlanes are the best remedy for this. If you find competing goals and friction, it’s usually a sign that you need a separate swimlane or need to deprioritize whatever is creating the friction.

How flexible is your prioritization?

On the theme level for quarterly planning, not particularly flexible. This is intentional, to ensure that we stay focused on the levers we’ve identified as having the highest potential. It’s also nice that when inbound requests don’t fit our quarterly themes, we have sound reasoning to explain why they aren’t being prioritized. Within the themes, we allow a lot of flexibility in which hypotheses we test. This reflects the fact that you are always learning and iterating as you go, so your hypothesis prioritization should be able to flex to accommodate that.

There is also a learning benefit to staying focused on a particular theme. As you run tests and iterate, your learning compounds within the theme, as opposed to being spread across too many user problems at once. Lastly, I dedicate a theme each quarter to “opportunistic bets” to allow for ideas that are too good to pass up but don’t fit neatly into the quarterly themes.

How do you do your quarterly roadmapping and planning?

After doing user research to identify the top reasons why someone doesn’t convert, we size the opportunity for each user problem and then select 2-4 user problems to prioritize for the quarter. Within those “themes”, we allocate a certain number of bets or experiments based on how many engineers are on the team and our experiment-velocity track record. We also allocate the number of bets according to how much we’ve explored the user problem to date: pilot (a couple of exploratory tests), double-down (as many tests as possible), and pivot (a couple of exploratory tests in a different direction).
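The allocation step described above can be sketched in Python as well. This version makes several assumptions the process itself doesn’t specify: capacity is estimated as the number of engineers multiplied by tests shipped per engineer last quarter, “a couple” of exploratory tests is taken to mean 2 for pilot and pivot themes, and whatever capacity remains is split as evenly as possible across double-down themes. The `Theme` class, function names, theme names, and numbers are hypothetical.

```python
import math
from dataclasses import dataclass

EXPLORATORY_TESTS = 2  # assumed meaning of "a couple" for pilot and pivot themes


@dataclass
class Theme:
    name: str
    stage: str  # "pilot", "double-down", or "pivot"


def quarterly_capacity(engineers, tests_per_engineer_last_quarter):
    """Estimate this quarter's test budget from the team's velocity track record."""
    return math.floor(engineers * tests_per_engineer_last_quarter)


def allocate_tests(themes, capacity):
    """Give pilot/pivot themes a couple of exploratory tests each and split the
    remaining capacity as evenly as possible across double-down themes."""
    allocation = {}
    double_down = []
    for theme in themes:
        if theme.stage in ("pilot", "pivot"):
            allocation[theme.name] = EXPLORATORY_TESTS
        elif theme.stage == "double-down":
            double_down.append(theme.name)
        else:
            raise ValueError(f"unknown stage for {theme.name}: {theme.stage!r}")
    remaining = capacity - sum(allocation.values())
    if remaining < 0:
        raise ValueError("not enough capacity to cover the exploratory themes")
    if double_down:
        share, extra = divmod(remaining, len(double_down))
        for i, name in enumerate(double_down):
            # The first `extra` double-down themes absorb one leftover test each.
            allocation[name] = share + (1 if i < extra else 0)
    # With no double-down themes, any remaining capacity is left unallocated.
    return allocation


# Hypothetical quarter: 4 engineers who averaged 2.5 tests each last quarter.
capacity = quarterly_capacity(engineers=4, tests_per_engineer_last_quarter=2.5)
themes = [
    Theme("Pricing clarity", "double-down"),
    Theme("Trust signals", "pilot"),
    Theme("Onboarding", "double-down"),
]
print(capacity, allocate_tests(themes, capacity))
```

Keeping the exploratory allocation fixed and letting the double-down themes absorb the rest mirrors the idea of putting as many tests as possible where the user problem is already proven.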